In-Cloud Document Editor

Work from anywhere with secure cloud-based document storage—your files are always available when you need them.

Write documents with AI-powered writing assistance. Get better results in less time.

Try WordGPT Free

An in-depth look at OpenAI's GPT-4.5, focusing on its advancements in unsupervised learning, emotional intelligence, and real-world applications. Explore its technical breakthroughs, practical uses, and transformative potential in the AI landscape.

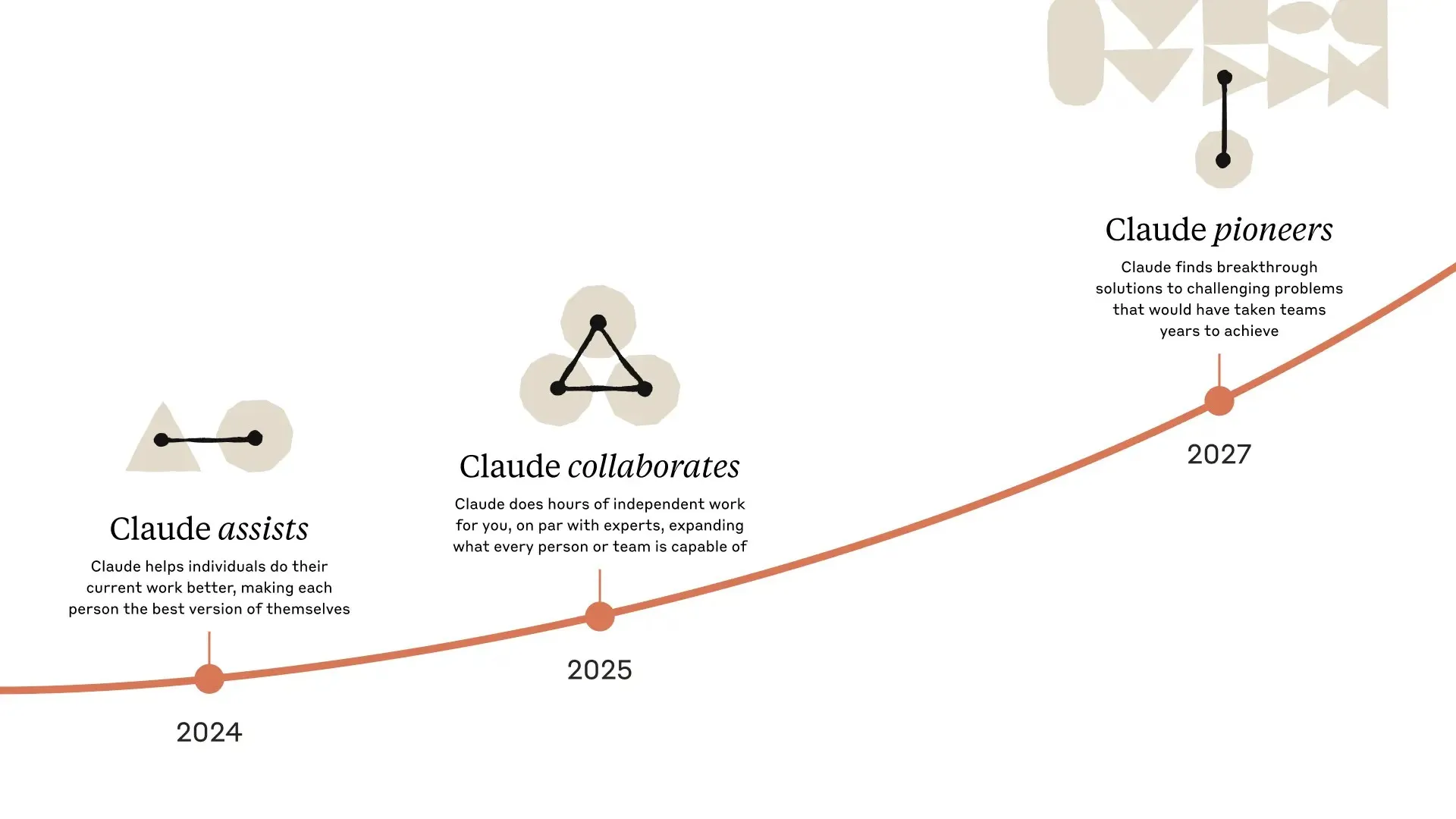

A comprehensive exploration of Anthropic’s Claude 3.7 Sonnet, the first hybrid reasoning AI model, and Claude Code, a command-line tool for agentic coding. Discover its technical breakthroughs, real-world applications, and transformative potential in the AI landscape.

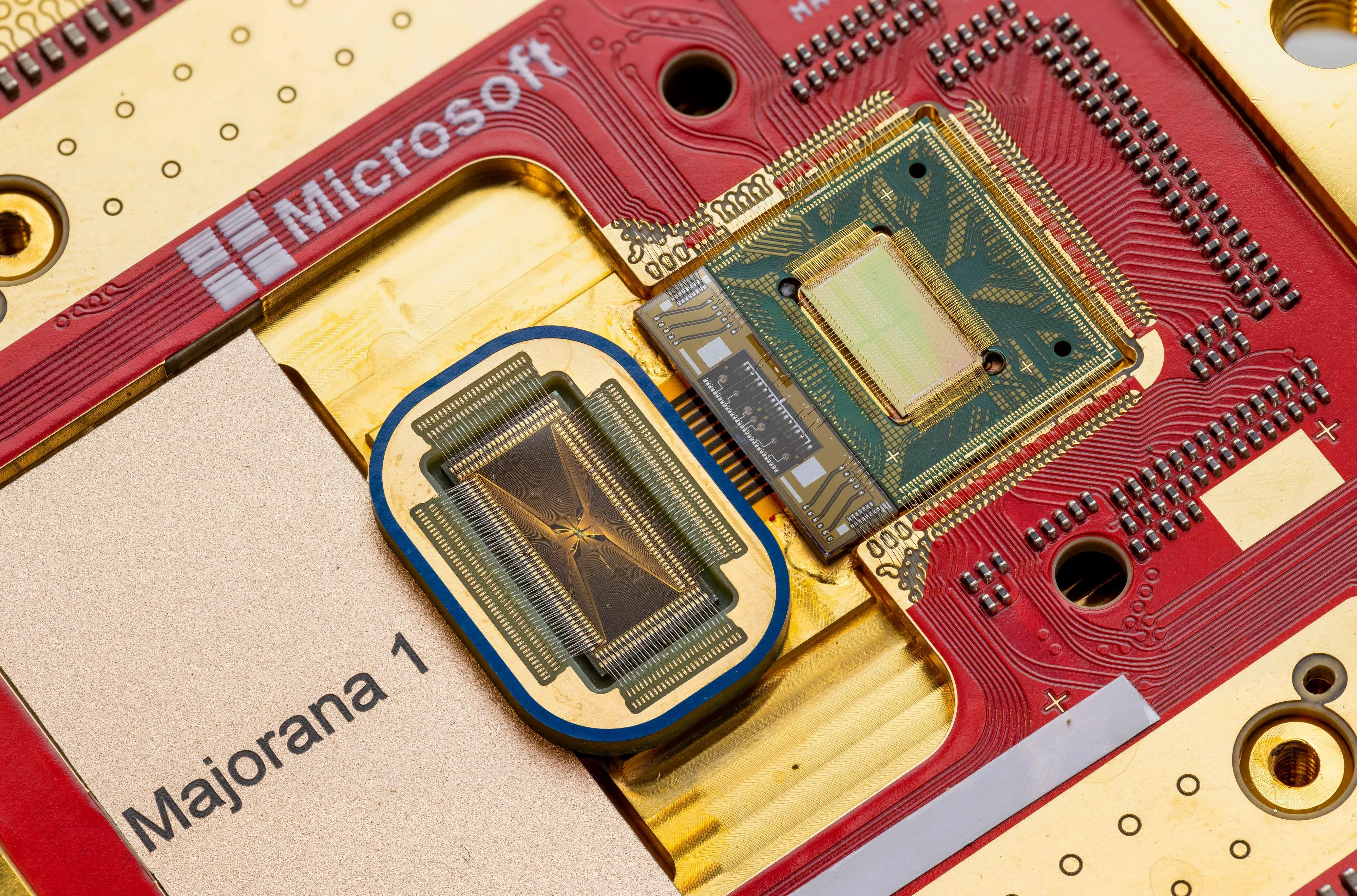

A comprehensive exploration of Microsoft’s Majorana 1 quantum processor, powered by topological qubits. From its physics and potential applications to its competitive edge and societal impact, discover how this breakthrough could redefine technology—and how WordGPTPro can enhance your productivity.

Explore the comprehensive report on xAI's Grok 3 launch, its unprecedented benchmark performance, DeepSearch capabilities, and access options. Discover how Grok 3 outshines competitors—and how you can try it for free before subscribing.

AI-Powered Writing Assistant

Leverage advanced AI to generate high-quality content, enhance your writing, and refine your ideas effortlessly.

Lightning-Fast Document Creation

Write a full page faster than you can read it—AI accelerates your workflow like never before.

AI-Powered Rephrasing

Instantly transform your text to match different tones—assertive, polite, or anything in between.

DOC & HTML Export

Seamlessly export your work in .doc or HTML formats for easy integration with blogs and editors.

User-Friendly Interface

Experience an intuitive, in-line paragraph editor that helps you refine and expand ideas effortlessly.
