NVIDIA AI Foundry Builds Custom Llama 3.1 Generative AI Models for the World’s Enterprises

Introduction

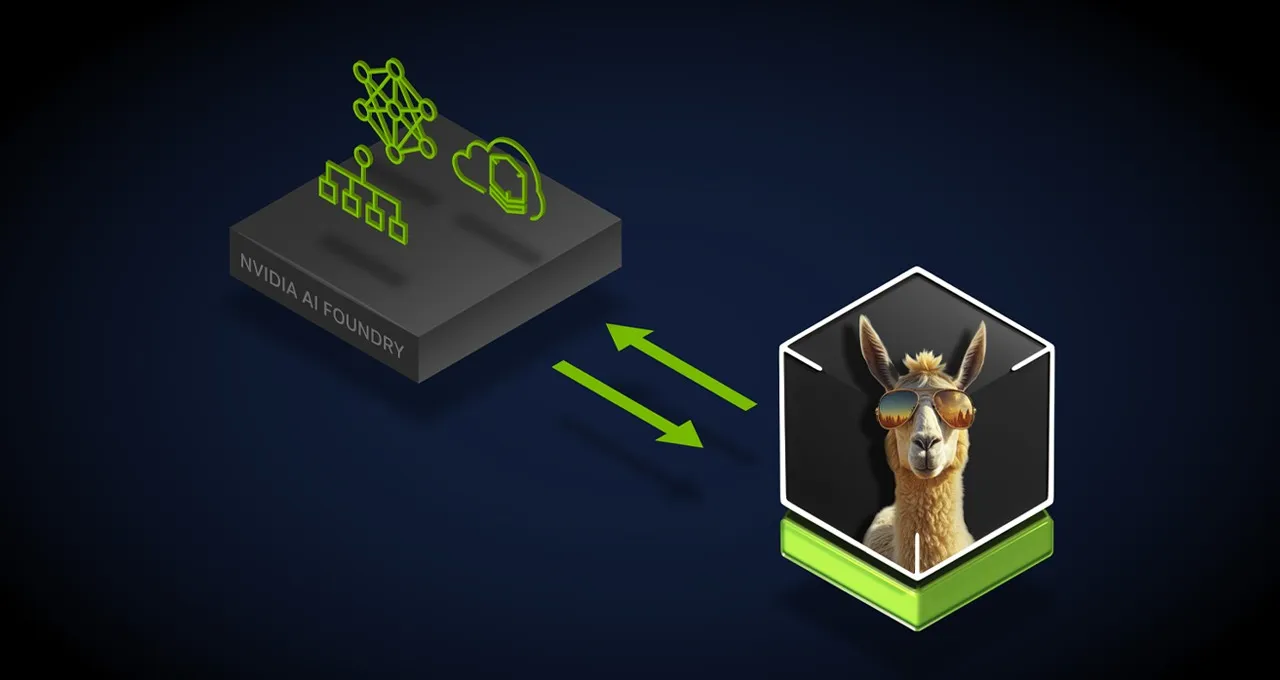

NVIDIA has announced the launch of its new service, the NVIDIA AI Foundry, which enables enterprises and nations to create custom generative AI models using Llama 3.1. The service pairs Llama 3.1 405B with NVIDIA’s Nemotron models to help businesses develop domain-specific “supermodels” tailored to their unique needs.

Overview of NVIDIA AI Foundry

What is NVIDIA AI Foundry?

The NVIDIA AI Foundry is a comprehensive service designed to facilitate the creation, deployment, and management of generative AI models. It covers the main stages of model development: data curation, synthetic data generation, fine-tuning, retrieval, guardrails, and evaluation.
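To make the curation stage concrete, here is a minimal Python sketch that assumes curation means normalizing text, dropping very short documents, and removing exact duplicates. The function names `normalize` and `curate` and the `min_words` threshold are hypothetical; production curation pipelines typically add fuzzy deduplication, language identification, and quality filtering.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivially different copies hash the same."""
    return re.sub(r"\s+", " ", text.strip().lower())


def curate(docs: list[str], min_words: int = 5) -> list[str]:
    """Drop very short documents and exact duplicates (after normalization),
    keeping the first occurrence of each document in its original form."""
    seen: set[str] = set()
    kept: list[str] = []
    for doc in docs:
        norm = normalize(doc)
        if len(norm.split()) < min_words:
            continue  # too short to be useful training text
        digest = hashlib.sha256(norm.encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # exact duplicate of an earlier document
        seen.add(digest)
        kept.append(doc)
    return kept
```

For example, `curate(["Claims must be filed within 30 days.", "claims must be  filed within 30 days.", "OK"])` keeps only the first sentence: the second is a duplicate after normalization and the third is too short.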

Key Features

- Custom Model Creation: Enterprises can leverage their own data along with Llama 3.1 models to build customized generative AI applications.

- Scalability: Powered by the NVIDIA DGX Cloud AI platform, the service can easily scale compute resources to meet changing AI demands.

- Integration with Existing Infrastructure: The NVIDIA NIM microservices allow for easy deployment across various MLOps and AIOps platforms.

The Significance of Llama 3.1

Introduction to Llama 3.1

Llama 3.1, released by Meta in July 2024, marks a significant milestone in generative AI. It extends the context window to 128K tokens and includes a 405B-parameter model, the largest in the Llama family to date, allowing businesses to build advanced AI applications finely tuned to their specific domains.

The Role of Open Source

The release of Llama 3.1 as an openly available model, with weights published under Meta’s Llama 3.1 Community License, has important implications for the adoption of generative AI across industries. Because anyone can download and adapt the weights, it encourages experimentation and innovation.

Use Cases of Custom Llama 3.1 Models

Industry Applications

- Healthcare: Custom Llama models can assist in patient diagnosis and personalized treatment plans.

- Finance: They can enhance fraud detection systems and customer service interactions.

- Retail: Personalized marketing and customer engagement can be significantly improved using generative AI models.

Government and Sovereign AI Strategies

Nations developing sovereign AI strategies can utilize Llama 3.1 models to create systems that reflect their cultural and societal needs, thereby fostering a more inclusive AI landscape.

Accenture: A Case Study

Partnership with NVIDIA

Accenture is one of the first companies to adopt the NVIDIA AI Foundry service. They are using the Accenture AI Refinery framework to build custom Llama 3.1 models, enabling enterprises to deploy AI applications tailored to their specific requirements.

Benefits to Clients

Through this partnership, Accenture can help clients realize the potential of generative AI by quickly deploying models that reflect their unique cultural and industry contexts.

NVIDIA NIM Microservices

What are NIM Microservices?

NVIDIA NIM microservices are designed to facilitate the deployment of Llama 3.1 models in production environments. They enhance throughput and ensure seamless integration with existing systems.

Advantages of NIM Microservices

- High Throughput: According to NVIDIA, up to 2.5x higher throughput than running inference without NIM.

- Streamlined Deployment: Simplifies the deployment process, allowing businesses to focus on building applications rather than managing infrastructure.
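NIM LLM microservices are designed to expose an OpenAI-compatible HTTP API, so a deployed Llama 3.1 NIM can be called with a plain JSON request. The sketch below uses only the Python standard library. The URL `http://localhost:8000/v1/chat/completions` and the model id `meta/llama-3.1-8b-instruct` are assumptions about a local deployment, not values taken from this article; adjust them to match your own.

```python
import json
import urllib.request

# Assumptions: a Llama 3.1 NIM container runs locally on port 8000 and exposes an
# OpenAI-compatible chat completions route; the model id is illustrative.
NIM_URL = "http://localhost:8000/v1/chat/completions"
MODEL_ID = "meta/llama-3.1-8b-instruct"


def build_chat_request(prompt: str, max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) a POST request in the OpenAI chat format."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": 0.2,
    }
    return urllib.request.Request(
        NIM_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the request to the running service and return the first reply's text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With a deployment running, `ask("Summarize our refund policy in two sentences.")` returns the generated text. Keeping `build_chat_request` separate from `ask` makes the payload easy to inspect and test without a live server.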

Advanced Retrieval Capabilities

Enterprises can pair Llama 3.1 NIM microservices with new NVIDIA NeMo Retriever NIM microservices to create state-of-the-art retrieval pipelines for AI copilots, assistants, and digital human avatars.

New NeMo Retriever RAG Microservices Boost Accuracy and Performance

The introduction of NVIDIA NeMo Retriever NIM inference microservices for retrieval-augmented generation (RAG) marks a significant advancement in the accuracy and performance of generative AI applications. By integrating these microservices into their deployments, organizations can enhance the response accuracy of their customized Llama supermodels and Llama NIM microservices in production environments.
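The retrieval-augmented generation pattern described above can be sketched in a few lines: embed the query, rank stored passages by similarity, and prepend the best matches to the prompt. This is a toy illustration, not the NeMo Retriever API. The bag-of-words `embed` function is a hypothetical stand-in for a real embedding model, and the prompt template is an assumption.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, passages: list[str], k: int = 2) -> list[str]:
    """Rank passages by similarity to the query and return the top k."""
    q = embed(query)
    return sorted(passages, key=lambda p: cosine(q, embed(p)), reverse=True)[:k]


def build_prompt(query: str, passages: list[str], k: int = 2) -> str:
    """Augment the user question with retrieved context before generation."""
    context = "\n".join(f"- {p}" for p in retrieve(query, passages, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )
```

In a production pipeline the ranking step would use a dense embedding model (and often a reranker), and the resulting prompt would be sent to the Llama 3.1 model for generation.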

Enhancing Response Accuracy

Using the new NeMo Retriever microservices, organizations can ensure that the responses generated by their Llama models are more contextually relevant and precise. This capability is crucial for applications requiring high levels of accuracy, such as customer support chatbots, virtual assistants, and other AI-driven interfaces that interact with users.

Superior Retrieval Accuracy

According to NVIDIA, when combined with NVIDIA NIM inference microservices for Llama 3.1 405B, NeMo Retriever NIM microservices deliver the highest open and commercial text Q&A retrieval accuracy for RAG pipelines. In practice, this means organizations can expect their systems to retrieve and present relevant information in response to user queries, leading to a more satisfying user experience.
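Retrieval accuracy is usually reported with ranking metrics. As one assumed example (the article does not say which metric underlies NVIDIA’s claim), the sketch below computes hit rate at k, often reported as recall@k when each query has a single relevant passage: the fraction of queries whose top-k results contain at least one relevant passage. The function name and passage ids are hypothetical.

```python
def recall_at_k(retrieved: list[list[str]], relevant: list[set[str]], k: int) -> float:
    """Fraction of queries for which at least one relevant passage id
    appears among the top-k retrieved ids (the hit-rate variant of recall@k)."""
    if len(retrieved) != len(relevant):
        raise ValueError("need one relevance set per query")
    if not retrieved:
        return 0.0
    hits = sum(1 for ranked, gold in zip(retrieved, relevant) if gold & set(ranked[:k]))
    return hits / len(retrieved)
```

Comparing this score at several values of k on a held-out set of question/passage pairs is a simple way to check whether a retriever change actually improves a RAG pipeline.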

Application Scenarios

The enhanced capabilities provided by NeMo Retriever NIM microservices can be applied across various industries, including:

- Healthcare: Improving patient interactions by providing accurate and context-aware responses in telemedicine applications.

- Finance: Enhancing customer service chatbots to deliver precise information regarding account details, transactions, and financial advice.

- Retail: Boosting e-commerce platforms with intelligent assistants that understand customer inquiries and provide tailored product recommendations.

Advanced Model Customization with Nemotron

Utilizing Synthetic Data

The Nemotron models can be used to generate synthetic data, enabling enterprises to enhance model accuracy and create more effective domain-specific models.
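A common synthetic-data pattern is to have a generator model propose several candidate responses per prompt and a reward model score them, keeping only high-scoring examples. The sketch below assumes that pattern. `generate` and `score` are hypothetical callables you would wire to a Nemotron instruct model and a reward model, respectively, and the threshold is illustrative.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Example:
    prompt: str
    response: str
    score: float


def synthesize(
    seed_prompts: list[str],
    generate: Callable[[str, int], list[str]],  # hypothetical: wraps a generator model
    score: Callable[[str, str], float],         # hypothetical: wraps a reward model
    n_candidates: int = 4,
    threshold: float = 0.5,
) -> list[Example]:
    """Generate several candidate responses per prompt, keep the best-scoring one,
    and discard it if it falls below the quality threshold."""
    dataset: list[Example] = []
    for prompt in seed_prompts:
        candidates = generate(prompt, n_candidates)
        if not candidates:
            continue
        scored = [Example(prompt, c, score(prompt, c)) for c in candidates]
        best = max(scored, key=lambda e: e.score)
        if best.score >= threshold:
            dataset.append(best)
    return dataset
```

Raising `threshold` trades dataset size for quality; the surviving examples can then feed fine-tuning alongside the enterprise’s own data.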

Domain-Adaptive Pretraining

Enterprises can further refine their models through domain-adaptive pretraining, ensuring that they meet the specific requirements of their industry.
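Domain-adaptive pretraining continues training on in-domain text. One common practice, assumed here rather than taken from NVIDIA’s documentation, is to blend domain text with general text so the model retains its general abilities while adapting. The `mix_corpora` helper and its `domain_fraction` parameter are hypothetical.

```python
import random


def mix_corpora(domain: list[str], general: list[str], domain_fraction: float,
                n_samples: int, seed: int = 0) -> list[str]:
    """Draw a training stream in which roughly `domain_fraction` of samples come
    from the domain corpus and the rest from general text. Both corpora must be
    non-empty whenever they can be selected."""
    if not 0.0 <= domain_fraction <= 1.0:
        raise ValueError("domain_fraction must be in [0, 1]")
    rng = random.Random(seed)  # seeded so the mixture is reproducible
    stream = []
    for _ in range(n_samples):
        source = domain if rng.random() < domain_fraction else general
        stream.append(rng.choice(source))
    return stream
```

The right `domain_fraction` depends on how far the target domain is from general text and is usually tuned empirically.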

The Future of Generative AI

Industry Trends

As more enterprises recognize the transformative potential of generative AI, the demand for customized models will continue to grow. The accessibility of Llama 3.1 models paves the way for rapid advancements in AI technology.

NVIDIA’s Commitment

NVIDIA remains committed to supporting the AI ecosystem, providing resources and tools that enable companies to innovate and drive the next wave of generative AI applications.

Comparative Analysis: Llama 3.1 vs. Other Generative AI Models

As the field of generative AI continues to evolve, various models have emerged, each with its unique strengths, architectures, and applications. This comparative analysis focuses on Llama 3.1 and contrasts it with several other prominent generative AI models currently available in the market, including OpenAI’s GPT-4, Google’s PaLM, and Anthropic’s Claude. By examining factors such as architecture, training data, performance, and use cases, we can better understand where Llama 3.1 fits within the broader landscape of generative AI.

1. Architecture

Llama 3.1

- Architecture: Llama 3.1 is a decoder-only transformer that uses grouped-query attention. It comes in three parameter sizes (8B, 70B, and 405B) to suit different computational budgets and application scenarios.

- Efficiency: Designed for efficient fine-tuning and adaptation, Llama 3.1 allows enterprises to create custom models tailored to their specific domain requirements.
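A quick way to see what the three sizes mean for computational needs is to estimate the memory required just to hold the weights: parameter count times bytes per parameter. The sketch uses nominal parameter counts and ignores the KV cache, activations, and runtime overhead, so real requirements are higher.

```python
# Nominal parameter counts for the three Llama 3.1 sizes.
PARAMS = {"8B": 8e9, "70B": 70e9, "405B": 405e9}
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp8": 1}


def weight_memory_gb(n_params: float, dtype: str) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes).
    Excludes KV cache, activations, and framework overhead."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


for name, n in PARAMS.items():
    row = ", ".join(f"{dt}: {weight_memory_gb(n, dt):,.0f} GB" for dt in BYTES_PER_PARAM)
    print(f"{name}: {row}")
```

In bf16, the 405B model needs about 810 GB for its weights alone, more than the 640 GB available on a single node of eight 80 GB GPUs, which is why the largest model is commonly served in lower precision or across multiple nodes.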

GPT-4 (OpenAI)

- Architecture: GPT-4 is also based on a transformer architecture, but OpenAI has not disclosed its parameter count or other architectural details, and outside estimates vary widely.

- Multimodal Capabilities: Unlike Llama 3.1, GPT-4 can process both text and images, making it a versatile choice for a wider range of applications.

PaLM (Google)

- Architecture: Google’s PaLM (Pathways Language Model) is a dense decoder-only transformer; its largest version has 540 billion parameters, reflecting a focus on scaling up parameters to improve performance and understanding.

- Training Infrastructure: PaLM was trained with Google’s Pathways system, which distributed training of the 540B model efficiently across 6,144 TPU v4 chips.

Claude (Anthropic)

- Architecture: Claude is also built on a transformer architecture but emphasizes safety and alignment in its design. It employs techniques that aim to reduce harmful outputs and enhance user trust.

- Human Feedback: Anthropic trains Claude with reinforcement learning from human feedback (RLHF), complemented by its Constitutional AI method, to refine responses and align them more closely with user intentions.
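Reward models in RLHF are commonly trained on pairs of responses in which a human marked one as preferred. The sketch below shows the standard pairwise (Bradley-Terry) loss from the RLHF literature; it illustrates the general technique and is not a description of Anthropic’s exact training objective. The function name is hypothetical.

```python
import math


def pairwise_reward_loss(r_chosen: float, r_rejected: float) -> float:
    """Bradley-Terry style loss -log(sigmoid(r_chosen - r_rejected)), computed
    stably as softplus(-(r_chosen - r_rejected))."""
    x = -(r_chosen - r_rejected)
    # softplus(x) = log(1 + e^x); split by sign to avoid overflow for large |x|
    return x + math.log1p(math.exp(-x)) if x > 0 else math.log1p(math.exp(x))
```

The loss shrinks as the reward model scores the preferred response further above the rejected one, and equals log 2 when it cannot tell them apart.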

2. Training Data

Llama 3.1

- Data Sources: Llama 3.1 was pretrained on roughly 15 trillion tokens of data from publicly available sources, giving it a broad understanding of language and context.

- Domain Adaptability: Enterprises can further train Llama 3.1 on proprietary and synthetic data to tailor its capabilities to specific business needs.

GPT-4 (OpenAI)

- Data Sources: GPT-4 was pretrained on publicly available data, such as internet text, together with data licensed from third-party providers.

- Ethical Considerations: OpenAI has implemented measures to filter out harmful or biased content during the training phase to promote safer outputs.

PaLM (Google)

- Data Sources: PaLM was trained on 780 billion tokens drawn from filtered web pages, books, Wikipedia, news articles, source code, and social media conversations.

- Diversity in Data: Google’s approach emphasizes diverse data sources to enhance the model’s understanding across various domains.

Claude (Anthropic)

- Data Sources: Claude utilizes a mix of publicly available data and data from diverse domains to improve understanding and responsiveness.

- Alignment Focus: The training process includes a strong emphasis on aligning model outputs with human values and preferences.

3. Performance

Llama 3.1

- Strengths: The three model sizes let teams trade accuracy against serving cost, and the models deliver strong performance in domain-specific applications once adapted.

- Customization: The ability to fine-tune Llama 3.1 with proprietary data enhances its performance in specialized tasks.
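The article does not specify how fine-tuning is performed. One widely used parameter-efficient technique is LoRA, which freezes a weight matrix and trains a low-rank update in its place. The arithmetic below compares trainable parameters for a single matrix; the 4096 width is illustrative, and the helper names are hypothetical.

```python
def full_params(d_in: int, d_out: int) -> int:
    """Trainable parameters when the whole weight matrix W (d_out x d_in) is updated."""
    return d_in * d_out


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters for a LoRA update W + B @ A,
    with A of shape (rank x d_in) and B of shape (d_out x rank)."""
    return rank * (d_in + d_out)


if __name__ == "__main__":
    d = 4096  # illustrative square projection width
    for r in (8, 16, 64):
        ratio = lora_params(d, d, r) / full_params(d, d)
        print(f"rank {r}: {lora_params(d, d, r):,} vs {full_params(d, d):,} ({ratio:.2%})")
```

At rank 16, the update for a 4096 × 4096 matrix has 131,072 trainable parameters versus 16,777,216 for the full matrix, under 1% of the original, which is what makes adapting large models on proprietary data affordable.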

GPT-4 (OpenAI)

- Strengths: GPT-4 excels in generating coherent, contextually relevant responses across a wide range of topics and formats.

- Benchmarking: It has shown strong performance on various language benchmarks, including reasoning and comprehension tasks.

PaLM (Google)

- Strengths: PaLM demonstrates impressive performance in language understanding and generation, particularly in tasks requiring complex reasoning.

- Scalability: Training on the Pathways system showed that a very large dense model can be scaled efficiently across thousands of accelerators, although doing so still requires substantial compute.

Claude (Anthropic)

- Strengths: Claude focuses on generating safe and reliable outputs, with a strong emphasis on minimizing harmful or biased content.

- User Trust: The incorporation of human feedback has been beneficial in improving the relevance and safety of its responses.

4. Use Cases

Llama 3.1

- Enterprise Applications: Ideal for businesses looking to create custom generative AI solutions that reflect specific cultural, linguistic, and industry nuances.

- Research and Development: Suitable for academic institutions and researchers developing innovative AI applications.

GPT-4 (OpenAI)

- Creative Writing: Commonly used for generating stories, poetry, and other creative content due to its ability to produce imaginative narratives.

- Customer Support: Employed in chatbots and virtual assistants for its conversational abilities.

PaLM (Google)

- Complex Problem Solving: Utilized in applications that require advanced reasoning and analytical capabilities.

- Cross-Disciplinary Applications: Effective in diverse fields such as healthcare, finance, and education for generating insights and recommendations.

Claude (Anthropic)

- Safety-Critical Applications: Preferred in scenarios where user safety and trust are paramount, such as mental health support or sensitive customer interactions.

- Alignment-Focused Tasks: Utilized in areas requiring high ethical standards and alignment with human values.

Conclusion

The introduction of the NVIDIA AI Foundry and Llama 3.1 represents a significant advancement in the field of generative AI. By empowering enterprises to create custom supermodels, NVIDIA is helping businesses harness the power of AI to drive innovation and improve efficiency across various industries.

Llama 3.1 presents a compelling option for enterprises seeking custom generative AI solutions tailored to their specific needs. While models like GPT-4 and PaLM offer advanced capabilities and versatility, Llama 3.1’s focus on efficiency, domain adaptability, and scalability positions it as a strong contender in the generative AI landscape. Each model has its strengths and ideal use cases, allowing organizations to choose the one that best aligns with their objectives.
