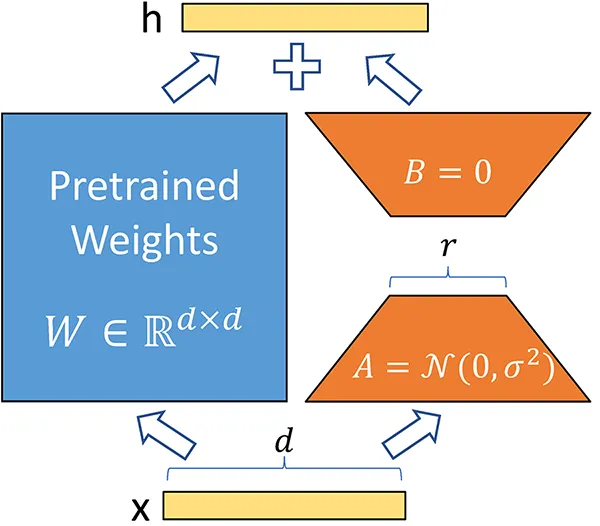

Apple has developed foundation language models to enhance Apple Intelligence across iOS, iPadOS, and macOS. These models consist of a 3 billion parameter on-device version and a more powerful server-based variant, both designed for optimal efficiency and versatility. The training process involves core pre-training on 6.3 trillion tokens, followed by continued pre-training with longer sequence lengths and context lengthening. For post-training, supervised fine-tuning and reinforcement learning from human feedback (RLHF) are employed, utilizing advanced techniques such as the iterative teaching committee (iTeC) and mirror descent with leave-one-out estimation (MDLOO). The models are further specialized using LoRA adapters, making them well-suited for on-device applications. Benchmark results indicate that the AFM-on-device model outperforms larger open-source models, while the AFM-server model competes with GPT-3.5. Both models excel in safety evaluations, underscoring Apple’s commitment to responsible AI practices.